DecryptedTech's New Enterprise Testing Lab

Written by Sean Kalinich

DecryptedTech is now moving into enterprise-class testing. To accomplish this we have built a small enterprise-class network in our lab, complete with two iSCSI SANs, two NAS devices, multiple Gigabit switches and two ESX hosts running multiple VMs to keep things interesting. We will begin testing enterprise-class hardware and software, looking at how the technology differs from average consumer-class products and how it will benefit consumers as it trickles down to their market space. Our first product is already in the lab, but before we kick that off, let’s talk about the new DecryptedTech enterprise-class lab in detail.

The Switches -

The backbone of our lab consists of five Gigabit switches. Two of these are from TRENDnet: the TEG-160WS and the TEG-240WS. Both are Web Smart managed switches, with 2Gbps trunking (two aggregated Gigabit links) set up between them for faster switching. Next we have a TRENDnet TPE-80WS PoE (Power over Ethernet) 8-port Gigabit switch, which offers quite a few more controls than the TEG line and serves as the root bridge (our master switch) for the RSTP (Rapid Spanning Tree Protocol) topology in place. Our second vendor in the lab is NETGEAR, which provided its ProSafe GS110TP PoE 10-port Gigabit switch (two of those ports are fiber uplinks) and a GS108T 8-port Gigabit switch. As mentioned, all of the switches are part of the RSTP topology, and each one has different components attached to ensure that the load is distributed across the network backbone.
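
The "master switch" role above corresponds to the RSTP root bridge, which every switch agrees on by comparing bridge IDs (priority first, then MAC address). The Python sketch below models only that election step, under stated assumptions: the priorities and MAC addresses are hypothetical placeholders, not values read from our switches, and we assume the TPE-80WS was given a lower bridge priority than the default 32768 so that it wins. Port roles and path costs, which the full protocol also computes, are left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Bridge:
    name: str
    priority: int  # bridge priority; RSTP default is 32768, set in steps of 4096
    mac: str       # bridge MAC address, used only to break priority ties

    def bridge_id(self) -> tuple[int, int]:
        # A bridge ID compares priority first, then the MAC address as a number.
        return (self.priority, int(self.mac.replace(":", ""), 16))


def elect_root(bridges: list[Bridge]) -> Bridge:
    # The bridge with the numerically lowest bridge ID becomes the root bridge.
    return min(bridges, key=Bridge.bridge_id)


# Hypothetical values for illustration only (locally administered MACs).
switches = [
    Bridge("TEG-160WS", 32768, "02:00:00:00:00:10"),
    Bridge("TEG-240WS", 32768, "02:00:00:00:00:20"),
    Bridge("TPE-80WS", 4096, "02:00:00:00:00:30"),
    Bridge("GS110TP", 32768, "02:00:00:00:00:40"),
    Bridge("GS108T", 32768, "02:00:00:00:00:50"),
]

print(elect_root(switches).name)  # TPE-80WS
```

If every switch were left at the default priority, the lowest MAC address would decide the root instead, which is why pinning a lower priority on the intended root is the usual practice.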

The Storage -

Our lab has three NAS devices, one of which is fully iSCSI capable (and works with VMware). The two non-iSCSI NAS devices are a Seagate BlackArmor 440 and a Thecus 5200 Pro. The Thecus 5200 Pro has 3TB of space and serves as an indirect file server, while the BA-440 has 4TB and acts as a media storage server and backup target. The last NAS on the list is a Synology DS 201, which has a full 1TB of space and holds the image files used to deploy VMs and to install software into the virtual environment.

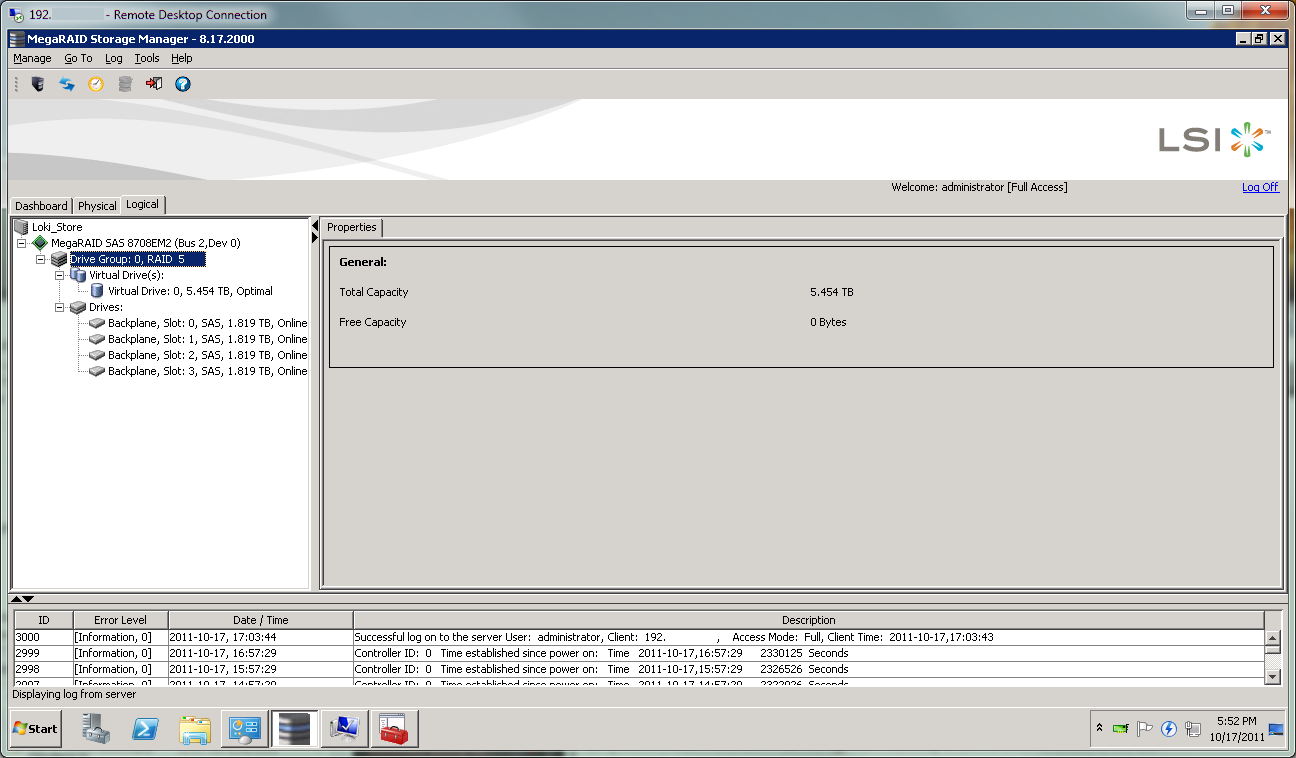

We are particularly proud of the last storage box. It is a custom-built NAS/SAN with an AMD Phenom II X4 910e, 4GB of memory, a Minix 890GX Mini-ITX motherboard and a 250GB OS drive. For the OS we dropped in Windows Storage Server 2008 R2. Of course, that is not what we are most proud of. For the actual storage we went with four Seagate 2TB Constellation ES nearline SAS 2.0 drives (ST32000444SS) running in RAID 5 on an LSI MegaRAID SAS 8708EM2 SAS 6Gb/s PCIe controller. It is this device, with its two teamed NICs, that provides the central iSCSI-based storage for our VMware cluster.
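
The RAID 5 arithmetic for this array is worth spelling out: parity is striped across all four drives, so one drive's worth of capacity goes to parity and the array can survive a single drive failure. The Python sketch below computes the raw usable capacity, assuming the drive maker's decimal definition of 2TB (2 × 10^12 bytes) and ignoring controller metadata and file system overhead; the function name is our own.

```python
def raid5_usable_bytes(drive_count: int, drive_bytes: int) -> int:
    """Usable capacity of a RAID 5 set: one member's worth goes to parity."""
    if drive_count < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (drive_count - 1) * drive_bytes


DRIVE_BYTES = 2 * 10**12  # "2TB" as marketed, i.e. decimal terabytes

usable = raid5_usable_bytes(4, DRIVE_BYTES)
print(usable / 10**12)           # 6.0  (decimal TB)
print(round(usable / 2**40, 2))  # 5.46 (TiB, which Windows labels "TB")
```

So the four 2TB drives yield roughly 6TB of raw usable space for the VM datastore, which Windows will display as about 5.46TB before formatting.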

The VMWare Cluster -

To make sure that we covered all of our bases, we built two VMware ESX hosts for a single cluster: one with Intel Xeons and the other featuring AMD Magny-Cours CPUs. Both systems have Kingston Server Premier memory installed (128GB of it between the two). The motherboards in each are from Asus and represent the mid-range of their server lineup.

The Intel system specs are as follows:

2x Intel Xeon L5530 2.4GHz CPUs

48GB of Kingston Server Premier RAM (6 x 8GB)

2x Kingston SSDNow 128GB drives in RAID 1 (for the ESX host software)

Asus Z8NA-D6 motherboard

Cooler Master UCP 1100 Power Supply

The AMD half of the cluster looks like this:

2x AMD Opteron 6176 SE CPUs (12 cores each, for 24 physical cores)

92GB of memory (80GB Kingston Server Premier 10 x 8GB and 12GB Kingston Value Select Server memory 6 x 2GB)

2 x Seagate 500 GB Savio II SAS 2.0 Drives in RAID 1

Asus KGPE-D16 Motherboard

Cooler Master UCP 1100 Power Supply
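
The memory figures above can be cross-checked with a little arithmetic. The Python sketch below totals the modules listed in the two spec lists, using a hypothetical `DimmGroup` record and `total_gb` helper of our own. It shows that the 128GB figure covers the Kingston Server Premier modules across both hosts, while the AMD host's additional 12GB of Value Select memory brings the cluster to 140GB overall.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DimmGroup:
    product_line: str  # Kingston memory line, as listed in the specs above
    count: int         # number of modules
    size_gb: int       # capacity per module in GB


HOSTS = {
    "Intel (Asus Z8NA-D6)": [DimmGroup("Server Premier", 6, 8)],
    "AMD (Asus KGPE-D16)": [
        DimmGroup("Server Premier", 10, 8),
        DimmGroup("Value Select", 6, 2),
    ],
}


def total_gb(groups: list[DimmGroup], product_line: str | None = None) -> int:
    # Sum module capacity, optionally restricted to one product line.
    return sum(
        g.count * g.size_gb
        for g in groups
        if product_line is None or g.product_line == product_line
    )


all_groups = [g for groups in HOSTS.values() for g in groups]
for host, groups in HOSTS.items():
    print(f"{host}: {total_gb(groups)}GB")  # 48GB for Intel, then 92GB for AMD
print(total_gb(all_groups, "Server Premier"))  # 128
print(total_gb(all_groups))                    # 140
```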

The cluster is running VMware ESX 4.1 (moving to 5.0 soon) and currently hosts 30 virtual machines, all stored on our custom-built NAS/SAN. Not all of these systems are powered on 24/7 (my power bill would be outrageous), but they are all on and operational when we have hardware in the lab that needs testing. Under normal conditions about seven servers are live. These include an Exchange cluster (Database Availability Group), a SQL server and a virtualized domain controller. Other servers that run under testing conditions include two additional SQL servers (for SharePoint and CRM), a two-node SharePoint farm, a XenDesktop test setup with three desktops, a web server with a full copy of DecryptedTech on it, and another virtualized Windows 2008 R2 domain controller. We feel this should be able to simulate the load of a fairly average business network.

In addition to the virtual systems, there is a standalone domain controller (Windows 2008 R2) and a complete Microsoft Forefront Threat Management Gateway installation to control external access to the test environment.

All in all, the testing lab has taken a giant leap forward. We hope to bring you in-depth reviews of hardware and software that, while outside the average consumer's range, will give you a glimpse of what is coming down the road for the consumer market in the not-so-distant future.
